November 17, 2020

by Carla Hay

Directed by Shalini Kantayya

Some language in Mandarin with subtitles

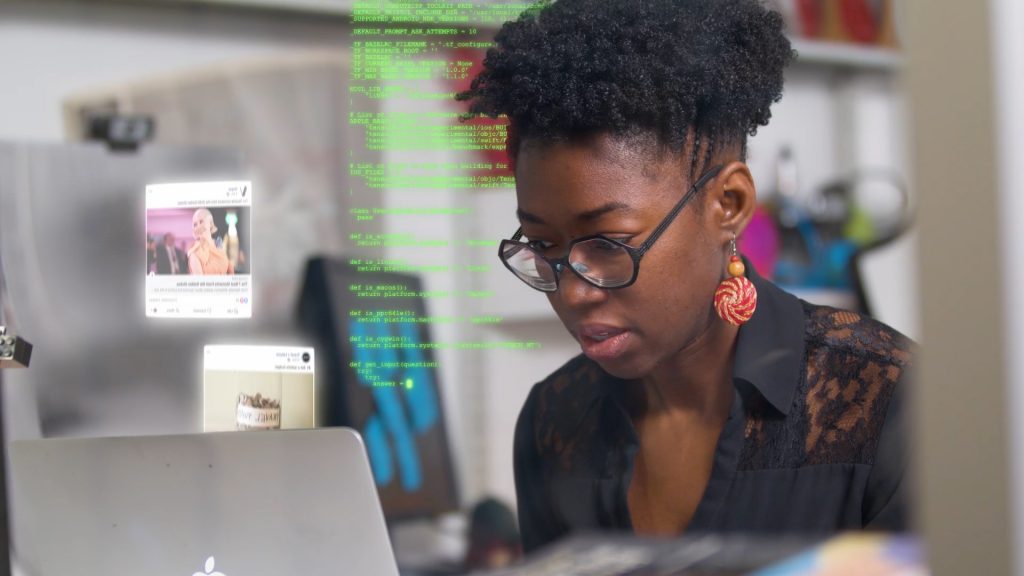

Culture Representation: Taking place in various parts of the United States, the United Kingdom, and China, the documentary “Coded Bias” features a predominantly female, racially diverse group (white people, African Americans and Asians) of academics and activists discussing racial and gender discrimination in computer algorithms and facial recognition technology.

Culture Clash: Several activists are fighting for legislation that prohibits or limits the use of big data that violates people’s rights or invades their privacy.

Culture Audience: “Coded Bias” will appeal primarily to people who are interested in issues over how technology and personal data are being used to profile, manipulate or control people.

With all the video surveillance and tracking of Internet activities that exist in the world, it would be extremely naïve to think that human prejudices and discrimination don’t play a role in how people’s lives are being monitored by computer technology. The excellent documentary “Coded Bias” (directed by Shalini Kantayya) takes an unflinching look at how this type of bigotry has a real impact on people’s lives. “Coded Bias” also shows what people can do to fight back against abuse of power when computer data and algorithms are used to invade privacy or violate people’s rights. (Algorithms are formulas that profile people, based on their past computer activities.)

Simply put: Computer algorithms and facial recognition technology can discriminate against people based on their race, gender or other identifying characteristics, which can affect people’s ability to get jobs, housing, loans or other resources. And the discrimination can go even further, by targeting people as likely perpetrators of crimes, based on their race or what they look like. The “Big Brother” concept, which was science fiction in George Orwell’s “1984” novel, has been a reality for quite some time.

Anytime that people sign up for social media, they allow the social-media platform to sell the data that users put on these social-media platforms. Photos on social media become part of secret profiles that these companies use for whatever they want. For example, Facebook’s controversial data-mining practices are well-documented. But those are just the practices that the media have reported. Think about what’s not being reported.

Similarly, law enforcement departments around the world are using video surveillance cameras on streets and in homes to profile people. Companies that sell home-surveillance video equipment have access to surveillance data that the companies can then sell to law enforcement. And it’s completely legal to sell this data, because it’s usually in the fine print that customers sign when they buy the equipment.

These are the kinds of covert activities that the average citizen doesn’t know about but “Coded Bias” exposes. Data gathering isn’t going to go away, but the documentary’s aim is to make the public more aware of these discrimination problems so that people won’t let governments or big corporations get away with abusing their powers in technology. It’s easier said than done. A lot depends on what type of government is setting the policies. In China, for example, people who want to sign up for Internet access are required to send photos of themselves to the government before access is granted.

Joy Buolamwini is an activist who founded Algorithmic Justice League to raise public awareness of bigotry in computer technology. Algorithmic Justice League also educates people on how to pressure lawmakers to address and eventually legislate against this ongoing problem. Buolamwini, who is African American, found out firsthand how pervasive racism can be in computer technology that is erroneously touted as “unbiased and objective.” When she was a Ph.D. candidate at MIT Media Lab, Buolamwini created an Aspire Mirror, and she got computer vision software that was supposed to track her face.

But when she looked in the Aspire Mirror, the device wouldn’t recognize her face until she put on a white mask. (In the documentary, she demonstrates the software’s bias in how it refuses to recognize her face until she “fakes” a lighter skin tone by putting on a white mask.) “That’s when I started looking into issues of bias that can creep into technology,” she says. Upon further investigation, Buolamwini noticed that the images used to train the software’s face detection were mostly of white males or people with light skin.

Why does this bias exist? As Buolamwini explained in her 2019 testimony about facial recognition technology before the U.S. House of Representatives, most computer technology is created by white males, who might have an unconscious or conscious bias that gives preference to people who look like them. “Coded Bias” includes some footage of these congressional hearings (with Alexandria Ocasio-Cortez asking a few questions), and viewers will get the impression that most of these people in Congress weren’t aware of how rampant this problem is until these hearings. The documentary shows that before the hearings, Buolamwini consulted with two officials from Georgetown University’s Center on Privacy and Technology: founding director Alvaro Bedoya and senior associate Clare Garvie.

Although bias in algorithms and facial recognition technology can affect people worldwide, “Coded Bias” wisely chose not to go to as many countries as possible, because then the documentary would run into problems of biting off more than it could chew. (This is a feature film, not a docuseries.) Instead, “Coded Bias” focuses on three countries in particular where technology bias is very prevalent: the United States, China and the United Kingdom.

Amy Webb, a futurist and author of “The Big Nine,” mentions in the documentary that there are currently only nine companies in the world that are major players in building artificial intelligence (A.I.) technology. Six of those companies are based in the U.S. (Amazon, Apple, Facebook, Google, IBM and Microsoft), while the other three companies are based in China (Alibaba, Baidu and Tencent).

Webb sees a big difference in A.I. technology in the U.S. and in China, because A.I. technology is being controlled by profit-driven businesses in the U.S. and by a Communist government in China: “It is being developed along two different tracks. China has unfettered access to everybody’s data … Conversely, in the United States, we have not seen a detailed point of view on artificial intelligence. It is being developed for commercial applications to earn revenues.”

Webb adds, “I would prefer to see our Western democratic ideals baked into our A.I. systems of the future. But it doesn’t seem like that’s probably happening.” Later in the documentary, Webb says of the profiling that goes on in data gathering: “We are all being scored … The difference is that China is transparent about it.” Ravi Naik, a human-rights lawyer in the United Kingdom, has this to say about anyone in the world who has a cell phone or uses the Internet: “You are volunteering information about every aspect of your life to a very small set of companies.”

In the United Kingdom, “Coded Bias” shows the work of Big Brother Watch UK, an activist group that pushes back against abuses of video surveillance and facial recognition technology. The documentary shows how Big Brother Watch UK activists (led by director Silkie Carlo) confronted plainclothes police officers in London over the use of facial recognition technology. The police set up a video camera on top of an unmarked van to record people without their permission (which is legal to do on a public street) and stopped people for questioning if the police felt that any of these random passersby’s faces matched a database of photos of criminal suspects.

The documentary catches on camera what happened to two people who were the targets of this surveillance. One man was detained and questioned by police simply because he saw the video surveillance and tried to cover his face with his sweater. When he angrily objected to police questioning him, he was given a ticket. On another occasion, a teenage boy was stopped, fingerprinted and questioned by police simply because they thought he looked like a criminal in their database. In both situations, the detainees were not arrested, but the police’s aggressive tactics were prompted by facial recognition surveillance.

During the confrontation with the man who tried to cover his face, Baroness Jenny Jones, who’s a member of the U.K. Parliament, happened to be nearby with Carlo. Jones and Carlo entered the discussion and scolded the police officers for violating people’s rights and pointed out that the facial recognition software is highly inaccurate. One of the officers admitted during the argument that the software is incorrect most of the time. Later in the documentary, Jones said she found out that she was on a government watch list.

Zeynep Tufekci, author of “Twitter and Tear Gas,” mentions how facial recognition technology is used in many countries to profile and track people who are involved in street protests, even if they are non-violent protests. She says that in Hong Kong, for example, protesters often use laser pointers to mess with the facial recognition technology that they know is being used on them.

Cathy O’Neil, author of “Weapons of Math Destruction,” explains why she wrote the book and is involved in activism against abuse of big data: “I realized that mathematics was used as a shield for corrupt practices … I’m very scared about this blind faith that we have in big data. We need to constantly monitor every process for bias.”

Buolamwini mentions in the documentary that she was inspired to start Algorithmic Justice League after she met O’Neil at a book signing. Buolamwini and O’Neil, who have become friends, are shown together in Washington, D.C., before Buolamwini testified before Congress. Buolamwini and O’Neil are the people who are featured the most prominently in “Coded Bias,” since they aren’t just talking heads. The movie also shows some of their home life.

“Coded Bias” wants people to know that problems with biased algorithms and facial recognition technology aren’t just about people being targeted for pesky ads on the Internet. There are very serious consequences that could affect people’s livelihood. And in some cases, people are unfairly targeted as criminals based on this biased technology.

“Coded Bias” includes an interview with Daniel Santos, a Houston sixth-grade teacher who was fired because of a controversial algorithm evaluation system that rated him as a bad teacher, based on data that the school refused to reveal. He filed a wrongful termination federal lawsuit that was settled out of court in 2017. The data and algorithm used in his termination still remain a secret.

The documentary also includes interviews with some apartment tenants of Atlantic Plaza Towers in New York City’s Brownsville neighborhood of Brooklyn. These tenants fought back against the landlord Nelson Management Group’s attempt to replace their keys with a facial recognition system. Most of the residents in the building are people of color, and they felt that the facial recognition system was racially biased because the landlord wasn’t trying to implement this facial recognition system in any of his apartment buildings where the majority of tenants are white.

Tranae Moran, one of the Atlantic Plaza residents, shows in the documentary some examples of surveillance photos that residents would receive from the apartment management if management suspected them of being up to no good, just because these residents were congregating in hallways or the apartment lobby. Tenants would receive “infractions” from management for “loitering” in their own building.

Icemae Downes, one of the Atlantic Plaza tenants who vehemently protested the facial recognition system, comments in the documentary: “What do we do to animals? We put chips in them so we can track them. I feel like I, as a human being, should not be tracked. I am not a robot. I am not an animal, so why treat me like an animal? I have rights!”

The controversy over the landlord’s attempt to put a facial recognition system in Atlantic Plaza Towers got so much negative publicity that the landlord eventually dropped those plans. But what would have happened if the tenants stood by and did nothing? What would have happened if the media didn’t report how much the video surveillance was being used against tenants who were being singled out and punished for simply gathering non-violently in a lobby?

Virginia Eubanks, author of “Automating Inequality,” says in the documentary: “The most punitive, most invasive, most surveillance-focused tools that we have, they go in poor and working communities first. If they work … they get ported out to other communities.”

And this should come as no surprise to anyone who sees that certain people tend to get preference in being hired for certain jobs: It’s mentioned in the documentary that Amazon had an A.I. recruiting tool that discriminated against women for software engineer jobs, by automatically rejecting résumés that identified the job applicants as women. Amazon eventually stopped using this biased recruiting tool, but how long would Amazon have kept this discriminatory practice if it had not been exposed by the media? We’ll never know.

Buolamwini doesn’t mince words when she says: “The progress made in the Civil Rights Era could be rolled back in the guise of machine neutrality … Left unregulated, there’s no kind of recourse if this power is abused.” She has this to say about the unregulated American companies involved in gathering data on people, without the companies being held accountable for how they use the data: “It is, in essence, a Wild Wild West.”

One of the things that’s immediately noticeable about “Coded Bias” is that the filmmakers definitely made an effort to go against a typical bias to interview mostly men for a documentary about technology. Most of the interviewees in “Coded Bias” are women, and many of them have doctorate degrees. Other people interviewed in the documentary include Meredith Broussard, author of “Artificial Unintelligence”; Deborah Raji, Partnership on A.I. research fellow; Safiya Umoja Noble, author of “Algorithms of Oppression”; Timnit Gebru, technical co-lead of the Ethical A.I. team at Google; criminal justice activist LaTonya Myers; attorney Mark Houldin; Wang Jia Jia, a resident of Hangzhou, China; and Griff Ferris of Big Brother Watch UK.

“Coded Bias,” which had its world premiere at the 2020 Sundance Film Festival, was completed before the COVID-19 pandemic. But if the movie had been made during the pandemic, it probably would have included information about how private medical information is being used to profile people for COVID-19 contact tracing. Social distancing during the pandemic has also led to a huge increase in using videoconference platforms, with Zoom as a popular platform, even though Zoom has faced controversies over breaches of security and privacy invasion of its customers.

There’s an updated epilogue in the movie that mentions that in June 2020, Amazon announced a one-year pause on police use of its facial recognition technology, and lawmakers in the U.S. House of Representatives introduced legislation to ban federal use of facial recognition. Also in June 2020, Amazon, IBM and Microsoft said that they would stop selling facial recognition technology to law enforcement. However, the epilogue mentions that there is still no U.S. federal legislation on algorithms.

“Coded Bias” presents a clear and well-demonstrated viewpoint that it’s up to the public to be vigilant and to pressure technology companies and the government into being accountable for how they gather big data and for any abuse of power with this data. Otherwise, people give too much power over to these entities, and personal freedoms are put at severe risk.

7th Empire Media released “Coded Bias” in select U.S. virtual cinemas on November 11, 2020.